Autonomous vehicles (AVs) are built on sensors. Cameras, lidar, and radar act as the vehicle’s eyes and ears, feeding perception systems the data they need to understand the environment. If these sensors fail, or if the vehicle’s software misinterprets their output, safety is at risk. Relying only on real-world testing to verify sensor performance and the functional safety of sensor data is costly. That’s why high-fidelity sensor simulation is no longer optional: it has become a cornerstone of how the industry validates AV and ADAS systems.

Why Sensor Simulation Is Essential

For years, the automotive industry has relied almost entirely on real-world testing. Fleets of cars are driven across countries to capture different weather, light, and traffic conditions: from Germany to Finland or from Detroit to Canada for winter, and down to Spain or Arizona for hot weather. This approach is slow, expensive, and incomplete. Many critical scenarios occur so rarely that test fleets may miss them altogether or fail to capture enough data to validate performance with confidence.

The problem is magnified as AV stacks move toward end-to-end AI models, like those pioneered by Tesla and Wayve. These systems can’t be trained or validated component by component. They need full-stack, sensor-inclusive training and testing. To keep costs under control and timelines competitive, training and testing must shift into simulation.

Limitations of Traditional Training and Testing Data

Real-world campaigns bring hidden inefficiencies. Recorded data often becomes useless when sensor hardware or mounting positions change. Debates between engineers and designers, such as whether a lidar belongs on the roof or hidden in the bumper, stall without evidence. Every change means repeating the same expensive mileage to generate new data.

In contrast, simulation allows the same scenarios to be replayed instantly under new conditions. This flexibility is crucial for both accelerating development and resolving internal design trade-offs.
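As a rough illustration of why a placement change is cheap to explore in simulation, the sketch below re-expresses one scene object in the frames of two candidate lidar mounts. The `Mount` class, the `to_sensor_frame` helper, and all coordinates are hypothetical and chosen only for illustration; the model considers yaw only and ignores roll, pitch, and occlusion.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Mount:
    """Hypothetical sensor mount pose in the vehicle frame (metres, radians)."""
    x: float
    y: float
    z: float
    yaw: float = 0.0  # rotation about the vertical axis


def to_sensor_frame(point, mount):
    """Express a vehicle-frame point (x, y, z) in the sensor frame of `mount`."""
    dx, dy, dz = point[0] - mount.x, point[1] - mount.y, point[2] - mount.z
    c, s = math.cos(mount.yaw), math.sin(mount.yaw)
    # Apply the inverse yaw rotation to the translated point.
    return (c * dx + s * dy, -s * dx + c * dy, dz)


# Illustrative values only: a roof mount and a bumper mount.
roof = Mount(x=1.5, y=0.0, z=1.8)
bumper = Mount(x=3.8, y=0.0, z=0.5)

# One scene object, 10 m ahead of the vehicle origin at ground level.
obstacle = (10.0, 0.0, 0.0)

for name, mount in (("roof", roof), ("bumper", bumper)):
    sx, sy, sz = to_sensor_frame(obstacle, mount)
    print(f"{name}: x={sx:.2f} y={sy:.2f} z={sz:.2f}")
```

The scenario itself is untouched; only the mount pose changes. On the road, the equivalent comparison would require refitting the vehicle and recollecting the data.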

Advantages of Sensor Simulation

High-fidelity sensor simulation unlocks advantages that road testing cannot:

- Early validation: Teams can test virtual sensor rigs long before front-end freeze.

- Test reuse: The same scenarios can be run across projects, even if sensor configurations evolve.

- Design flexibility: Mounting options can be explored virtually to balance technical and design needs.

- Scalability: Teams can scale testing faster and at lower cost, cutting time-to-market.

This last point is the most important: sensor simulation is not just an alternative to road testing; it is the only way to achieve the speed and scale the industry now demands.

How Sensors Are Different (And Difficult To Simulate)

Not all sensors behave the same, and that makes simulation challenging:

- Camera: Relatively straightforward to simulate, but realism and compute performance are key. Sun glare, fog, rain, and reflections must all look natural enough that computer vision algorithms respond to them as they would to real imagery.

- Lidar: Generates dense point clouds using time-of-flight or frequency-modulated continuous-wave (FMCW) technology. Simulation must account for noise, environmental effects like snow or heavy rain, and wavelength-specific behavior.

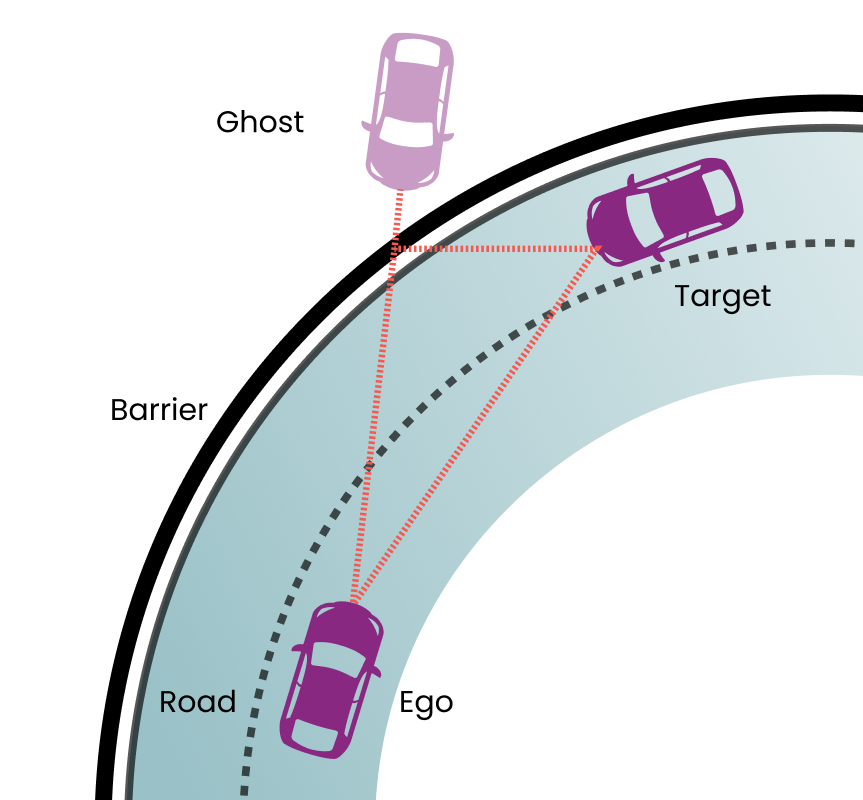

- Radar: The most complex to simulate. Sensor-specific effects, such as multipath reflections off guardrails, wet roads, or barriers, create “ghost” targets: false objects that move realistically and can confuse tracking algorithms. High-fidelity radar simulation requires deep, sensor-specific modeling.

These differences and challenges are exactly why high-fidelity sensor simulation is so complex, and why only a handful of solutions in the market can perform it effectively.
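Two of the effects above can be sketched in a few lines, under strong simplifying assumptions. The first function converts a lidar round-trip time into a range and optionally adds Gaussian noise. The second places a radar ghost target at the mirror image of a real target across a flat reflector, which is one common simplification of multipath. The function names, the noise model, and all numbers are illustrative assumptions, not a vendor sensor model.

```python
import random

SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_range_from_tof(round_trip_s, noise_std_m=0.0, rng=None):
    """Time-of-flight range: the pulse travels out and back, so halve the path."""
    ideal = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0
    if noise_std_m > 0.0:
        if rng is None:
            rng = random.Random()
        # Assumed noise model: zero-mean Gaussian range error.
        return ideal + rng.gauss(0.0, noise_std_m)
    return ideal


def mirror_across_line(point, line_point, line_dir):
    """Mirror a 2D point across an infinite line (a stand-in for a guardrail)."""
    px, py = point
    ax, ay = line_point
    dx, dy = line_dir
    norm = (dx * dx + dy * dy) ** 0.5
    dx, dy = dx / norm, dy / norm
    # Foot of the perpendicular from the point onto the line.
    t = (px - ax) * dx + (py - ay) * dy
    fx, fy = ax + t * dx, ay + t * dy
    return (2.0 * fx - px, 2.0 * fy - py)


# A 1 microsecond round trip corresponds to roughly 150 m of range.
print(lidar_range_from_tof(1e-6))
print(lidar_range_from_tof(1e-6, noise_std_m=0.02, rng=random.Random(0)))

# Guardrail running along the x direction at y = 3 m; real target at (20, 1).
ghost = mirror_across_line((20.0, 1.0), (0.0, 3.0), (1.0, 0.0))
print(ghost)
```

Here the ghost lands at (20.0, 5.0), behind the guardrail, and it moves whenever the real target moves, which is why such detections are hard for a tracker to reject. A high-fidelity model would also need antenna patterns, material properties, and higher-order reflections, none of which this sketch attempts.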

Foretellix + NVIDIA: A Complete Toolchain

The Foretify platform is the industry’s Physical AI toolchain for training, validation, and safety evaluation of AI-powered AV stacks. It combines scenario-driven generation and evaluation with high-fidelity sensor simulation to close the gap between real-world and virtual testing.

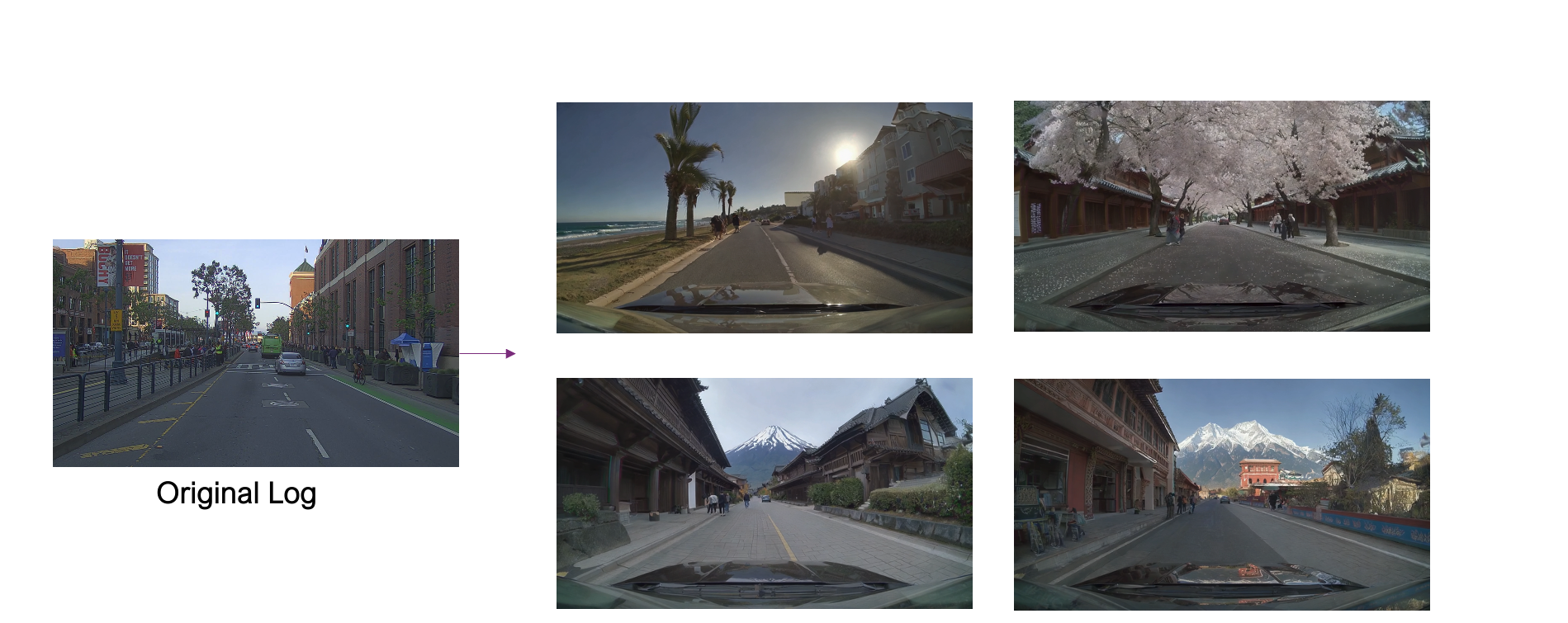

- Foretify Generate: Creates synthetic data and hyper-realistic scenarios at scale, covering edge cases across diverse operational design domains (ODDs).

- Foretify Evaluate: Unifies real-world driving data with simulation results to identify coverage gaps and deliver safety-critical performance metrics.

- Integration with NVIDIA Omniverse, NuRec, and Cosmos: Adds hyper-realistic sensor rendering and vendor-specific models, capturing the behavior of cameras, lidar, and radar with high fidelity. It also enables neural reconstruction, which transforms logged real-world drives into reusable, editable simulation scenes, allowing teams to vary conditions like weather, actors, or sensor placements.

Together, these capabilities give AV developers a data-driven autonomy development toolchain that unifies training and testing. By supporting both open- and closed-loop simulation in the behavior and sensor domains, it enables the generation of diverse training data, accelerates validation, reduces cost, and, most importantly, ensures safe AI-powered autonomy.

The Road Ahead

Sensor simulation is still early in its adoption curve. Today, only a minority of traditional OEMs use it beyond research projects, while new entrants lean on it heavily. Within five years, we anticipate every automaker will depend on sensor simulation as part of daily development.

The fidelity of simulation is also improving rapidly. Sensor simulation is already highly realistic in nominal scenarios and covers many edge cases and physical behaviors, but the industry is now pushing toward near-complete coverage of real sensor behavior. While some physical road miles will always be required, the majority of training and validation will move into the virtual domain.

With rising cost pressures, shorter timelines, and the shift to end-to-end AI stacks, high-fidelity sensor simulation has become indispensable. Foretellix, in partnership with NVIDIA, is helping the industry accelerate this transformation, delivering the realism and flexibility needed to enable safe AI-powered autonomy at scale.

Learn more about Foretellix’s end-to-end toolchain for AV and ADAS validation.

About the Author

Sebastian Klaas, Director of Product Management at Foretellix, drives the strategic development of the Foretify platform. Prior to Foretellix, Sebastian held senior technical positions at Audi, Luminar, and Samsung Semiconductor. He holds a degree in Computer Science from the Karlsruhe Institute of Technology (KIT).
