The new solution enables AV training and validation with high-fidelity sensor simulation.

Autonomous vehicle (AV) developers must ensure safety and reliability while accelerating time to market and controlling development costs. To address these challenges, Foretellix integrated the NVIDIA Omniverse Cloud APIs to enable high-fidelity sensor simulation on the Foretify™ platform. The new solution revolutionizes the way AVs are trained and validated by providing physically based, high-fidelity, end-to-end simulation at scale. It will benefit both emerging AV stacks that rely on end-to-end AI training and stacks composed of separate perception and planning subsystems.

This week, NVIDIA and Foretellix are demonstrating the enhanced sensor-simulation workflow at GTC.

“With high-fidelity sensor simulation, the Foretify platform delivers a complete toolchain for training and validating autonomous vehicles,” said Ziv Binyamini, Foretellix CEO and co-founder. “By integrating the NVIDIA Omniverse Cloud APIs, we enable customers to streamline and automate the development process, reduce development costs, and accelerate the deployment of autonomous technologies on a global scale.”

“The AV industry is expanding the use of end-to-end AI models, which require physically based sensor simulation,” said Zvi Greenstein, Vice President of Autonomous Vehicle Infrastructure at NVIDIA. “By leveraging NVIDIA Omniverse Cloud APIs, Foretellix is enhancing its Foretify toolchain with new sensor simulation capabilities and setting a new standard for AV development and validation.”

The Need for High-Fidelity Sensor Simulation

Modern AVs rely on multiple sensors, including cameras, radars, and lidars, to build an accurate model of the world around them. The AV must correctly identify, track, and react to many actors, such as other vehicles, pedestrians, and cyclists. It must also handle real-world conditions such as weather, lighting, road conditions, and sensor degradation. However, collecting real-world data for training and validation is an extensive and costly effort that involves test drives, data logging, and labeling.

Object-level virtual simulation is broadly used to validate the planning and control subsystem, but validating the perception subsystem requires sensor simulation. Furthermore, emerging AV solutions rely on sensor data to train and validate AI models end to end. However, achieving sensor simulation that accurately reflects real-world behavior and physics at scale is extremely challenging.

Performance and safety issues often result from the interaction between the perception subsystem and the planning and control subsystem. When these subsystems are validated separately, some issues surface only during real-world tests. Subsystem validation is therefore not enough on its own. To ensure the safe operation of the AV, the entire system must also be validated end to end across a wide variety of driving scenarios, perception conditions, and locations.

Virtual End-to-End V&V

Foretellix and NVIDIA can deliver physically accurate, virtual, end-to-end validation at scale by enabling high-fidelity sensor simulation via the Omniverse Cloud APIs. The integration provides seamless access to NVIDIA’s breakthrough sensor simulation performance and Foretellix’s state-of-the-art automated test generation and monitoring technology.

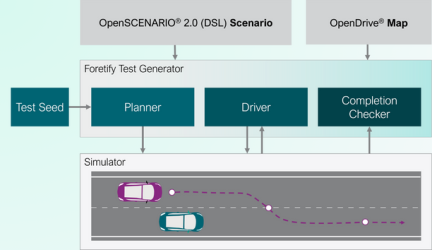

Foretellix customers can use abstract scenarios to automate the generation of end-to-end tests, perform large-scale triage, assess performance and KPIs, and measure operational design domain (ODD) coverage. Figure 1 shows a high-level diagram of the test development flow.

The Foretify Core test generator generates and executes concrete tests from abstract scenarios. These scenarios are either created by customers using Foretify Developer or imported ready-made from V-Suites, the industry’s most comprehensive OpenSCENARIO® 2.0 (DSL) library. Each concrete test comprises multiple actors along with their maneuvers and perception parameters. Foretify Core provides mission control information to the AV stack, actor control commands to the simulator, and world-state and perception parameters to the NVIDIA Omniverse Cloud APIs.

The Omniverse Cloud APIs render physically accurate sensor data based on the simulated world state and the provided perception parameters. They deliver the sensor data to the AV stack, which processes it and generates actuation commands for the simulator.

Foretify Core executes the test by controlling the simulator at runtime. It uses advanced behavioral models to dynamically control and adjust each actor’s actions during simulation execution to achieve the scenario intent. Foretify Core is pre-integrated with most industry-leading simulators and can also integrate with OEM proprietary platforms, such as the Nuro simulator, as demonstrated at NVIDIA GTC.
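The closed loop described above can be made concrete with a minimal Python sketch of one concrete test passing through a test generator, simulator, sensor renderer, and AV stack. Every class, parameter, and number in it is a hypothetical stand-in, including `Simulator`, `SensorRenderer`, `AVStack`, `run_test`, and the fog detection range. None of them are Foretify or Omniverse Cloud APIs. The "renderer" also returns only a detected gap rather than rendered camera, radar, or lidar data.

```python
from dataclasses import dataclass

# Hypothetical stand-ins only: none of these classes are Foretify or
# Omniverse Cloud API types. They model the data flow, not the products.


@dataclass
class WorldState:
    time_s: float = 0.0
    ego_pos_m: float = 0.0
    ego_speed_mps: float = 10.0
    lead_pos_m: float = 40.0


@dataclass
class PerceptionParams:
    weather: str = "clear"  # hypothetical perception parameter


class Simulator:
    """Object-level simulator: moves the lead actor and the ego vehicle."""

    def __init__(self) -> None:
        self.state = WorldState()

    def step(self, lead_speed_mps: float, ego_accel_mps2: float, dt: float) -> WorldState:
        s = self.state
        s.lead_pos_m += lead_speed_mps * dt
        s.ego_speed_mps = max(0.0, s.ego_speed_mps + ego_accel_mps2 * dt)
        s.ego_pos_m += s.ego_speed_mps * dt
        s.time_s += dt
        return s


class SensorRenderer:
    """Stand-in for a sensor-simulation service: world state plus perception
    parameters -> sensor frame. Detection ranges are made-up numbers."""

    RANGE_M = {"clear": 100.0, "fog": 10.0}

    def render(self, state: WorldState, params: PerceptionParams) -> dict:
        gap = state.lead_pos_m - state.ego_pos_m
        visible = gap <= self.RANGE_M[params.weather]
        return {"lead_gap_m": gap if visible else None}


class AVStack:
    """Toy AV stack: brakes when it perceives the lead vehicle inside a safe gap."""

    def __init__(self, safe_gap_m: float = 20.0) -> None:
        self.safe_gap_m = safe_gap_m

    def act(self, frame: dict) -> float:
        gap = frame["lead_gap_m"]
        return -3.0 if gap is not None and gap < self.safe_gap_m else 0.5


def run_test(params: PerceptionParams, lead_speed_mps: float = 5.0,
             duration_s: float = 20.0, dt: float = 0.1) -> float:
    """Run one concrete test in closed loop and return the minimum gap (m)."""
    sim, renderer, av = Simulator(), SensorRenderer(), AVStack()
    ego_accel, min_gap = 0.0, float("inf")
    for _ in range(round(duration_s / dt)):
        # Test generator -> simulator: actor control command (lead speed).
        state = sim.step(lead_speed_mps, ego_accel, dt)
        # World state + perception parameters -> rendered sensor data.
        frame = renderer.render(state, params)
        # Sensor data -> AV stack -> actuation command for the next step.
        ego_accel = av.act(frame)
        min_gap = min(min_gap, state.lead_pos_m - state.ego_pos_m)
    return min_gap
```

Comparing `run_test(PerceptionParams("clear"))` with `run_test(PerceptionParams("fog"))` illustrates, in this toy setup, why sensor-level testing matters. The same scenario and the same planner leave a much smaller minimum gap when a perception parameter limits what the AV stack can see. That is an interaction a test feeding ground-truth objects directly to the planner would not expose.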

With these new capabilities, the Foretify platform can measure ODD coverage across multiple perception parameters, analyzing and reporting which conditions have been tested in which driving scenarios. This improves both the assessment of system safety and the efficiency of testing.
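In its simplest form, measuring coverage across perception parameters means bucketing executed tests into combinations of scenario and condition values, then reporting which combinations were never exercised. The sketch below assumes a hypothetical three-dimensional coverage model with made-up value lists. It does not show Foretify’s actual coverage model or APIs.

```python
from itertools import product

# Hypothetical coverage model: the dimension names and values are
# illustrative only and are not taken from Foretify.
SCENARIOS = ("cut_in", "pedestrian_crossing")
WEATHER = ("clear", "rain", "fog")
LIGHTING = ("day", "night")


def odd_coverage(executed_tests):
    """Return (fraction of coverage bins hit, sorted list of untested bins).

    Each bin is one (scenario, weather, lighting) combination; each executed
    test is a dict recording the values it actually ran with.
    """
    all_bins = set(product(SCENARIOS, WEATHER, LIGHTING))
    hit = {(t["scenario"], t["weather"], t["lighting"]) for t in executed_tests}
    hit &= all_bins  # ignore values outside the defined coverage model
    return len(hit) / len(all_bins), sorted(all_bins - hit)


tests = [
    {"scenario": "cut_in", "weather": "clear", "lighting": "day"},
    {"scenario": "cut_in", "weather": "rain", "lighting": "night"},
    {"scenario": "pedestrian_crossing", "weather": "fog", "lighting": "night"},
    {"scenario": "cut_in", "weather": "clear", "lighting": "day"},  # same bin again
]
ratio, holes = odd_coverage(tests)
print(f"coverage: {ratio:.0%}, untested bins: {len(holes)}")
# → coverage: 25%, untested bins: 9
```

Collecting hits in a set counts repeated tests once per bin. The ratio therefore reflects the breadth of tested conditions rather than test volume, and the list of holes points at the combinations still to be generated.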

High-Fidelity Reconstruction and Smart Replay of Drive Logs

Companies developing AVs collect huge volumes of drive logs from physical drives. Storing all the sensor data requires enormous storage space, so often only object-level information is stored. Foretify LogIQ ingests object-level drive logs into the high-fidelity virtual simulation flow to reconstruct and replay a physically accurate, sensor-level simulation of the drive (Figure 2). This saves significant time and cost in development, debugging, and issue resolution. In addition, the Foretify Smart Replay capability can generate variations of the original drive log, including perception parameter variations and maneuver modifications, to improve the validation of new AV software versions.
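Generating variations from a single drive log can be illustrated as a cross product of perception-parameter values and maneuver modifications applied to an object-level log. This sketch simplifies heavily. The record schema (`ActorSample`), the single maneuver modification (a longitudinal shift of one actor), and `make_variants` are all hypothetical. They are not the LogIQ or Smart Replay interface.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class ActorSample:
    """One object-level drive-log record (hypothetical schema)."""
    t_s: float
    actor_id: str
    x_m: float
    speed_mps: float


@dataclass(frozen=True)
class ReplayVariant:
    weather: str     # perception-parameter variation
    offset_m: float  # maneuver modification: longitudinal shift of one actor
    samples: tuple


def make_variants(log, target_actor, weathers, offsets_m):
    """Cross every weather value with every position offset of one actor."""
    variants = []
    for weather, offset in product(weathers, offsets_m):
        samples = tuple(
            replace(s, x_m=s.x_m + offset) if s.actor_id == target_actor else s
            for s in log
        )
        variants.append(ReplayVariant(weather, offset, samples))
    return variants


drive_log = [
    ActorSample(0.0, "ego", 0.0, 10.0),
    ActorSample(0.0, "lead_car", 30.0, 8.0),
    ActorSample(1.0, "ego", 10.0, 10.0),
    ActorSample(1.0, "lead_car", 38.0, 8.0),
]
variants = make_variants(drive_log, "lead_car", ["clear", "rain", "fog"], [0.0, -10.0])
print(len(variants))  # → 6
```

Shifting the whole trajectory leaves the logged speeds consistent with the positions. A modification that changed speeds would also need the positions to be re-integrated.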

Generate Synthetic Data Sets for Training

Retraining AI systems is often required when the AV underperforms in certain conditions, such as previously unknown edge cases found during validation in physical driving or virtual simulation. Using Foretellix Foretify and the NVIDIA Omniverse Cloud APIs, developers can now automatically generate large numbers of sensor-accurate data sets to train the AV. This is especially useful when recreating similar conditions in a test drive is unsafe, costly, or difficult.

Enhance Safety, Improve Time to Market, and Reduce Development Costs

The Foretify platform with Omniverse Cloud APIs powers a major shift in AV testing, accelerates AI training, enables much higher utilization of drive logs, and improves the synergy between physical drives and virtual testing. It enables OEMs and AV developers to perform end-to-end, physically accurate simulations to help improve AV performance and safety, accelerate time to market, and reduce development costs.

To learn more about the Foretify platform, visit www.foretellix.com/foretify-platform/. To learn more about NVIDIA Omniverse Cloud, visit www.nvidia.com/en-us/omniverse/.